Machine learning (ML) models increasingly influence decisions in sectors ranging from healthcare and finance to hiring. As these models become more integrated into decision-making processes, ensuring they are fair and unbiased has become a significant concern. The performance of machine learning models depends heavily on the data fed into them, and when that data reflects biases, the resulting predictions and decisions can perpetuate or even amplify these biases. In this article, we will explore the concepts of bias and fairness in machine learning, why they matter, and how data analysts, especially those starting out in a data analyst internship, can address them.

What is Bias in Machine Learning?

Bias in machine learning refers to the systematic error introduced by the data or algorithms, which results in models that unfairly favor certain outcomes over others. Bias can arise from various sources, including historical data that reflects societal inequalities, incomplete or skewed data, or flawed assumptions in the algorithm’s design.

For instance, consider a machine learning model designed to predict creditworthiness. If the historical data used to train the model contains biased decisions (e.g., discrimination against certain demographics based on race or gender), the model will likely reproduce and may even amplify these biases. This is problematic because it can lead to unfair outcomes that adversely affect marginalized groups.

Types of Bias in Machine Learning

1. Data Bias: Data bias occurs when the data used to train a machine learning model is unrepresentative of the broader population or contains historical biases. For example, if a facial recognition system is trained on a dataset primarily consisting of white male faces, it may struggle to accurately recognize people of other races and genders.

2. Sampling Bias: This happens when certain groups are overrepresented or underrepresented in the data, leading to skewed predictions. In medical datasets, if a certain demographic is not adequately represented, the resulting models may be less accurate for that group.

3. Measurement Bias: Measurement bias happens when data is collected or recorded in a way that introduces systematic inaccuracies. For example, if a survey asks people to self-report their income, some respondents may overstate or understate it, so the recorded values differ systematically from the true ones.

4. Algorithmic Bias: Sometimes, the algorithm itself introduces bias by amplifying patterns in the data that may be biased. If an algorithm is optimized for a specific group of users or outcomes, it may inherently favor them over others.
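
One way to make the sampling bias described above concrete is to compare each group's share of a dataset with the share it is expected to have in the target population. The sketch below is a minimal illustration under stated assumptions: the function name `representation_gaps`, the group labels, and the reference shares are all hypothetical, and real reference shares would have to come from a trusted source such as census figures.

```python
from collections import Counter


def representation_gaps(groups, reference_shares):
    """Return observed share minus expected share for each reference group.

    A negative value means the group is underrepresented in the data.
    """
    counts = Counter(groups)
    total = len(groups)
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }


# Hypothetical data: group labels for 10 training records.
sample_groups = ["A"] * 8 + ["B"] * 2
# Hypothetical population shares the dataset is supposed to reflect.
reference = {"A": 0.5, "B": 0.5}

gaps = representation_gaps(sample_groups, reference)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")  # A: +0.30, B: -0.30
```

Here group B makes up 20% of the records against an assumed 50% of the population, so it is underrepresented by 30 percentage points.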

Why Fairness in Machine Learning Matters

Fairness in machine learning aims to ensure that algorithms make decisions that are equitable for all individuals, regardless of race, gender, socioeconomic status, or other factors. Fairness is important for several reasons:

- Ethical Considerations: Fair algorithms promote social justice by helping ensure that decisions, such as loan approvals, hiring, or medical diagnoses, do not unfairly disadvantage certain groups.

- Legal Compliance: In many industries, such as finance and healthcare, discrimination based on certain characteristics (e.g., race or gender) is illegal. Machine learning models must therefore be designed to avoid discriminatory outcomes.

- Trust in AI: As machine learning models become more integrated into society, ensuring they are fair and unbiased is crucial for maintaining public trust in these systems.

How to Address Bias and Ensure Fairness in Machine Learning Models

Addressing bias and ensuring fairness in machine learning models is an ongoing process that requires careful consideration of the data, the algorithm, and the real-world impact. Here are some approaches to achieving fairness:

1. Diverse and Representative Data:

One of the most effective ways to reduce bias in machine learning models is to use diverse and representative datasets. This means including a wide variety of examples that cover all demographics relevant to the model’s application. For example, if you are developing a health prediction model, include data from different age groups, genders, and ethnicities in the training dataset.

2. Bias Detection Tools:

Several tools and techniques can help identify and mitigate bias in machine learning models. These include fairness-aware algorithms and statistical tests that assess whether the model’s predictions differ across different demographic groups. Tools such as IBM’s AI Fairness 360 and Google’s What-If Tool can help developers visualize and measure fairness issues in their models.
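
A simple check of whether predictions differ across demographic groups is to compare selection rates, that is, the share of positive predictions each group receives. The sketch below computes these rates and their largest gap, often called the demographic parity difference. It is a plain-Python illustration, not the API of AI Fairness 360 or the What-If Tool; the function names and the toy data are assumptions made for this example.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical binary predictions (1 = approved) for two groups.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
grps = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(preds, grps)
dpd = demographic_parity_difference(preds, grps)
print(rates)  # {'A': 0.75, 'B': 0.25}
print(dpd)    # 0.5
```

A difference of 0 means every group is selected at the same rate. How large a gap is acceptable depends on the application and is a judgment the metric cannot make on its own.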

3. Re-sampling and Re-weighting:

In cases of biased data, re-sampling (adjusting the dataset to balance underrepresented groups) or re-weighting (assigning more importance to underrepresented groups during training) can help ensure fairness. These methods help ensure that the model learns more about the underserved or historically marginalized groups.
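
Re-weighting can be sketched as follows, assuming the goal is for every group to carry the same total weight during training. The formula used here (total records divided by the number of groups times the group's count) is one common choice rather than the only one, and the function name and data are hypothetical.

```python
from collections import Counter


def balancing_weights(groups):
    """Per-record weights that give every group the same total weight.

    Each record in group g gets total / (n_groups * count_g), so records
    from smaller groups receive larger weights.
    """
    counts = Counter(groups)
    total = len(groups)
    n_groups = len(counts)
    return [total / (n_groups * counts[group]) for group in groups]


# Hypothetical dataset: 8 records from group A, 2 from group B.
record_groups = ["A"] * 8 + ["B"] * 2
weights = balancing_weights(record_groups)

print(weights[0])   # 0.625 (a group A record)
print(weights[-1])  # 2.5   (a group B record)
```

With these weights, both groups contribute a total weight of 5.0. Many training APIs accept per-record weights of this kind, for example through a `sample_weight` argument in scikit-learn estimators that support it.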

4. Regular Audits and Monitoring:

Bias is not a one-time issue: new data or real-world deployment of models can cause it to evolve. Regular audits of machine learning models and ongoing monitoring are essential to detecting new biases as they arise. Data analysts, especially those in a data analyst internship, can play an essential role here by analyzing model performance and flagging and addressing any disparities.

5. Incorporating Fairness Constraints:

Designers can build machine learning algorithms with fairness constraints to ensure that the model’s predictions meet certain fairness criteria. They can explicitly code these constraints into the algorithm to prevent the model from making biased decisions, even if the training data contains some bias.

6. Transparency and Accountability:

To improve fairness, machine learning models must be transparent, meaning that the reasoning behind their decisions should be explainable and understandable to the end user. This is particularly important in sensitive applications like hiring, lending, and criminal justice. Data analysts working on these projects must ensure the models are interpretable and that users can challenge decisions they believe are biased.

The Role of Data Analysts in Addressing Bias and Fairness

As a data analyst intern, you will likely work with machine learning models and may need to identify biases in the data or model performance. Here’s how you can contribute:

- Data Cleaning and Preprocessing: One of the key responsibilities of a data analyst is to clean and preprocess data. During this phase, you can identify and correct issues related to data bias, such as missing values or imbalances in the data.

- Bias Audits: Perform audits on machine learning models to check for fairness. This includes checking for disparate impacts or accuracy disparities across demographic groups.

- Collaboration with Developers: As a data analyst intern, you will work closely with data scientists and engineers. Collaborating with them on building and testing models for fairness is critical to ensuring ethical decision-making.

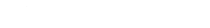

- Continuous Learning: Stay updated on the latest techniques and tools for addressing bias and fairness. Engage with platforms like EasyShiksha to access courses on advanced topics like ethical AI and fairness in machine learning.
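
The bias audit mentioned in the list above, checking for disparate impact and accuracy disparities across demographic groups, can be sketched in a few lines. This is a minimal illustration with hypothetical function names and toy data. The 0.8 threshold mentioned afterwards is the commonly cited "four-fifths" rule of thumb, used here as an assumption rather than a universal legal standard.

```python
def group_accuracy(y_true, y_pred, groups):
    """Fraction of correct predictions within each group."""
    correct, totals = {}, {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] = totals.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + (truth == pred)
    return {group: correct[group] / totals[group] for group in totals}


def disparate_impact_ratio(y_pred, groups, protected, reference):
    """Selection rate of the protected group divided by the reference group's.

    Assumes both groups are present and the reference rate is nonzero.
    """
    def rate(target):
        preds = [p for p, g in zip(y_pred, groups) if g == target]
        return sum(preds) / len(preds)

    return rate(protected) / rate(reference)


# Hypothetical labels and predictions (1 = favorable outcome).
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

accuracy = group_accuracy(y_true, y_pred, groups)
ratio = disparate_impact_ratio(y_pred, groups, protected="B", reference="A")
print(accuracy)  # {'A': 1.0, 'B': 0.75}
print(ratio)     # 0.5
```

In this toy data, group B is both predicted less accurately and selected at half the rate of group A. A ratio below 0.8 would be flagged for closer review under the four-fifths rule of thumb.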

Conclusion

Bias and fairness are fundamental concerns in the development and deployment of machine learning models. Ensuring that these models produce equitable outcomes requires careful attention to data, algorithms, and real-world implications. By understanding and addressing bias, machine learning practitioners—especially those starting their careers through a data analyst internship—can contribute to building more just and ethical systems. Platforms like EasyShiksha.com offer training and resources for budding data analysts to learn how to identify and address these challenges, helping to pave the way for more responsible use of AI and machine learning in society.